The underlying principle of big data technologies is to operate on an ever-growing volume of data that must be stored, accessed, processed, analyzed, and then delivered to multiple users with velocity and in a variety of formats.

Hadoop with MapReduce has proven itself as an ideal platform for complex batch operations on huge amounts of data at very low cost. However, its reliance on persistent storage for fault tolerance and its single-pass computation model make MapReduce a poor fit for low-latency applications and iterative computations. One possible way to overcome this challenge is as follows:

- For low-latency computations, bring the working dataset into memory and then perform computations at memory speed, and

- For efficient iterative algorithms, have subsequent iterations share data through memory, or repeatedly access the same in-memory dataset
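
The difference between the two access patterns can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how Hadoop or any IMC product is implemented: the CSV file stands in for persistent storage, the "algorithm" is a simple gradient-descent estimate of the mean, and every function name (`write_dataset`, `load_dataset`, `iterate_from_disk`, `iterate_in_memory`) is hypothetical.

```python
import csv
import os
import tempfile


def write_dataset(path, values):
    """Persist one numeric value per row (stand-in for on-disk storage)."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        for v in values:
            writer.writerow([v])


def load_dataset(path):
    """Read the whole working dataset from disk into an in-memory list."""
    with open(path, newline="") as f:
        return [float(row[0]) for row in csv.reader(f)]


def step(estimate, data, rate):
    """One gradient-descent step toward the mean of the data."""
    gradient = sum(estimate - x for x in data) / len(data)
    return estimate - rate * gradient


def iterate_from_disk(path, iterations, rate=0.5):
    """Disk-bound pattern: every iteration re-reads its input from storage."""
    estimate, reads = 0.0, 0
    for _ in range(iterations):
        data = load_dataset(path)  # one full read per pass
        reads += 1
        estimate = step(estimate, data, rate)
    return estimate, reads


def iterate_in_memory(path, iterations, rate=0.5):
    """In-memory pattern: load once, then all iterations share the same data."""
    data = load_dataset(path)  # single read
    reads = 1
    estimate = 0.0
    for _ in range(iterations):
        estimate = step(estimate, data, rate)
    return estimate, reads


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "values.csv")
        write_dataset(path, [1.0, 2.0, 3.0, 4.0, 5.0])
        print("disk-bound:", iterate_from_disk(path, 20))
        print("in-memory: ", iterate_in_memory(path, 20))
```

Both variants compute the same estimate. The only difference is how often storage is touched: once per iteration in the first case, once in total in the second. On a toy file the saving is negligible, but it grows with both dataset size and iteration count.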

In other words, this approach is no different from the operating principles of In-Memory Computing. Gartner defines In-Memory Computing (IMC) as a computing style in which the primary data store for applications (the “data store of records”) is the central (or main) memory of the computing environment (on single or multiple networked computers) running these applications.

We can further observe that In-Memory Computing is a close relative of In-Memory Databases. In-memory databases and caching products have been available for over a decade, but so far they have occupied a fairly small niche in the data management market. Advances in both hardware and software architecture make memory-based computing more relevant today than in the past. This follows the trend popularly associated with Moore’s law: memory prices continue to slide while the number of processor cores per chip rises.

In my opinion, not every application needs to tackle all three V’s of the Big Data paradigm, and In-Memory Computing stands out where volume and velocity intersect heavily. To illustrate, I was recently part of the design of one such business scenario. The problem was to build a hyper-responsive bidding application in the sports domain that had to support millions of bids within a few seconds, driven by the changing state of an ongoing live event or game. We needed to handle large volume (the number of bids per event) at rapid velocity (users bidding simultaneously on a given event within fractions of a second), while variety was almost not applicable. I think this makes it a perfect example of an IMC use case. While evaluating IMC during this implementation, I came across some ideal business scenarios for the IMC approach, listed below:

- Batch-oriented processes that need real-time data processing at in-memory speed

- Extreme consistency requirements at high speed on huge volumes of data

- Low-latency computations

- Applications that need faster batch and iterative algorithm implementations

- Applications that provide intensive analytics such as data mining, forecasting, security intelligence, customer relationship management, and supply chain planning

- Applications that require complex event processing such as predictive monitoring, intelligent metering, and fraud and risk management

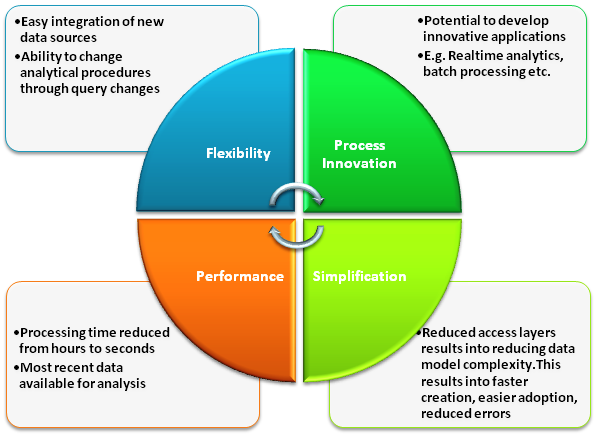

If we look for a common link in the above set of requirements and applications, it helps us identify the key benefits of IMC. In my opinion these benefits are better performance, process innovation, added flexibility, and simplification. Performance and innovation help differentiate an application from the competition, while added flexibility and simplicity result in a reduced total cost of ownership.

As we can see, IMC definitely has the potential to address complex analytical and advanced business intelligence requirements. However, because IMC is not yet widely adopted, successfully harnessing its power will require application architectures to have a clear strategy for every step, from evaluation to implementation.