Data science capabilities deliver the most value when they are implemented strategically around enterprise requirements. Faster decision-making, meaningful insights for future enterprise expansion and early forecasting of business issues are some of the benefits associated with data science. Let us look at the life cycle of a data science project and understand how it enables enterprises to make efficient use of data.

Implementing a data science workflow equips enterprises with business insights. The CRISP-DM model (Cross-Industry Standard Process for Data Mining), which defines an industry-wide process model for data mining projects, is widely used by enterprise data scientists to tackle business problems. Data science projects follow a similar workflow, with some changes to accommodate the specific requirements of each project.

Before embarking on a data science project, it is important to define a data strategy. This strategy can be formulated by answering some fundamental questions:

1. What are the business requirements/strategic goals?

2. What information is required to achieve a particular goal?

3. Is this information available in our data?

4. How robust and accurate is the information extracted from the data?

To answer these questions, data scientists must work with subject matter experts to understand the business processes and the data architecture.

Once a data strategy has been created and its feasibility verified, building the data science system reduces to an engineering problem with a defined sequence of steps and an exit strategy.

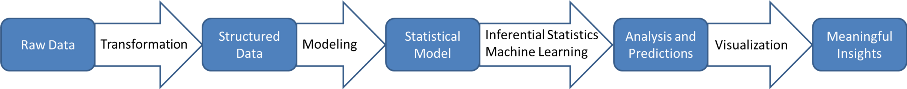

Raw data:

Raw data already exists in the current data architecture and can take the form of unstructured data (video, text), relational databases, CSV files, NoSQL databases, etc. It usually cannot be used directly for the project because it is not in a suitable format.
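As a minimal illustration, assume the raw data arrives as a CSV export (the column names and values below are hypothetical). Python's standard `csv` module reads every field back as a string, with blanks where values are missing, which is one reason raw data is not yet ready for modeling:

```python
import csv
import io

# Hypothetical raw CSV export; note the blank age in the second row.
RAW_CSV = """customer_id,age,monthly_spend
C001,34,120.50
C002,,89.99
C003,29,4500.00
"""

def load_raw_records(text):
    """Read CSV text into a list of dicts; every value stays a string."""
    return list(csv.DictReader(io.StringIO(text)))

records = load_raw_records(RAW_CSV)
for row in records:
    print(row)
```

Numbers such as `34` and `120.50` come back as text, and the missing age comes back as an empty string, so both need handling in the transformation step.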

Transformation:

Raw data needs to be converted into structured data before models can be built. This transformation can range from simple data type conversions to sophisticated algorithms that fill in missing values and eliminate outliers. The specific transformations required depend entirely on the needs of the project.
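The sketch below shows the three kinds of transformation mentioned above using only the Python standard library: type conversion, filling a missing value with the median, and dropping outliers with the interquartile-range (IQR) rule. The field names, sample rows, median imputation and the 1.5 IQR threshold are illustrative assumptions, not a prescribed method:

```python
import statistics

# Hypothetical raw rows: all values are strings, one age is missing,
# and one monthly_spend value is an obvious outlier.
raw_rows = [
    {"age": "34", "monthly_spend": "120.50"},
    {"age": "", "monthly_spend": "89.99"},
    {"age": "29", "monthly_spend": "4500.00"},
    {"age": "41", "monthly_spend": "150.00"},
    {"age": "38", "monthly_spend": "110.25"},
    {"age": "25", "monthly_spend": "95.00"},
]

def to_float(value):
    """Convert a string field to float, mapping blanks to None (missing)."""
    return float(value) if value.strip() else None

def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def iqr_bounds(values, k=1.5):
    """Return (low, high) bounds using the interquartile-range rule."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

# Step 1: convert types; only age has missing values here, so only age is imputed.
ages = impute_median([to_float(r["age"]) for r in raw_rows])
spend = [to_float(r["monthly_spend"]) for r in raw_rows]

# Step 2: keep only rows whose spend lies within the IQR bounds.
low, high = iqr_bounds(spend)
structured = [
    {"age": a, "monthly_spend": s}
    for a, s in zip(ages, spend)
    if low <= s <= high
]
print(structured)
```

Median imputation and the IQR rule are simple, robust defaults; a real project might instead use model-based imputation or domain-specific validity ranges.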

Structured data:

Structured data is ready to be used to build models. A structured dataset contains a sufficiently large number of data points (records), with no missing values or incorrect entries, and a sufficient number of dimensions (features) to ensure that meaningful insights can be extracted. The format of this structured data depends on the goals of the project.
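One way to make "ready to be used" concrete is an automated check run before modeling. This is a minimal sketch; the required fields and the minimum row count are hypothetical thresholds that a real project would derive from its data strategy:

```python
# Hypothetical readiness thresholds; a real project would set these
# from its data strategy rather than hard-coding them.
MIN_ROWS = 3
REQUIRED_FIELDS = ("age", "monthly_spend")

def readiness_report(rows, required=REQUIRED_FIELDS, min_rows=MIN_ROWS):
    """Return a list of problems that make the dataset unfit for modeling."""
    problems = []
    if len(rows) < min_rows:
        problems.append(f"only {len(rows)} rows, need at least {min_rows}")
    for i, row in enumerate(rows):
        for field in required:
            value = row.get(field)
            if value is None:
                problems.append(f"row {i}: missing {field}")
            elif not isinstance(value, (int, float)):
                problems.append(f"row {i}: {field} is not numeric")
    return problems

good = [{"age": 34.0, "monthly_spend": 120.5},
        {"age": 29.0, "monthly_spend": 95.0},
        {"age": 41.0, "monthly_spend": 150.0}]
bad = [{"age": 34.0, "monthly_spend": None},
       {"age": "29", "monthly_spend": 95.0}]

print(readiness_report(good))
print(readiness_report(bad))
```

An empty list means the dataset passes these particular checks; it does not prove the data is correct, only that it is complete and typed as expected.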

Modeling:

Using structured data, we create a model that formalizes the relationships between the dimensions of our data points, thereby describing the data-generating processes in an idealized form. When modeling the data, data scientists often focus on specific portions of the total data available to them and apply feature engineering to improve the quality of the models. The modeling method chosen depends on the goals defined for the project and its data strategy, as well as on the nature of the data itself.
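To illustrate how feature engineering can improve a model, the sketch below fits an ordinary least-squares line twice: once on a raw feature and once on its logarithm. The data is hypothetical and deliberately constructed so that spend grows with the logarithm of tenure; the coefficient of determination (R squared) shows which feature explains the data better:

```python
import math
import statistics

# Hypothetical structured data: tenure in months and monthly spend,
# constructed so that spend = 10 + 5 * log2(tenure).
tenure = [1, 2, 4, 8, 16, 32]
spend = [10.0, 15.0, 20.0, 25.0, 30.0, 35.0]

def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = intercept + slope * xs."""
    x_mean = statistics.fmean(xs)
    y_mean = statistics.fmean(ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope

def r_squared(xs, ys, intercept, slope):
    """Fraction of the variance in ys explained by the fitted line."""
    y_mean = statistics.fmean(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - y_mean) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

# Model 1: raw feature.
b0_raw, b1_raw = fit_line(tenure, spend)
r2_raw = r_squared(tenure, spend, b0_raw, b1_raw)

# Model 2: engineered feature (log2 of tenure).
log_tenure = [math.log2(t) for t in tenure]
b0_log, b1_log = fit_line(log_tenure, spend)
r2_log = r_squared(log_tenure, spend, b0_log, b1_log)

print(f"raw feature: R^2 = {r2_raw:.3f}")
print(f"log feature: R^2 = {r2_log:.3f}, spend = {b0_log:.1f} + {b1_log:.1f} * log2(tenure)")
```

With real data the improvement will rarely be this clean; the point is that a transformed feature can capture a relationship the raw feature misses.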

Statistical model:

The statistical model gives insight into the processes that generated the data it was built from. It describes the relationships between the various dimensions of the data under consideration and can be used to derive further insights about that data.

Inferential statistics and machine learning:

To extract meaning from data, we use the models we have created to draw inferences about the data, or use machine learning to automate decision-making based on insights from the data. This is where a data science project adds value.
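A small example of drawing an inference: estimating a range for the average order value of all customers from a sample. This is a sketch using a normal-approximation confidence interval on hypothetical data; for a sample this small, a t-distribution interval would be slightly wider and more appropriate:

```python
import math
import statistics
from statistics import NormalDist

# Hypothetical sample of order values from randomly chosen customers.
order_values = [52.0, 48.5, 61.2, 55.0, 47.8, 58.3, 50.1, 63.4, 49.9, 54.7,
                57.2, 51.6, 60.0, 46.5, 53.3, 56.8, 59.1, 48.2, 52.9, 55.5]

def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    n = len(sample)
    mean = statistics.fmean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return mean - z * std_err, mean + z * std_err

low, high = mean_confidence_interval(order_values)
print(f"95% CI for mean order value: {low:.2f} to {high:.2f}")
```

The interval, rather than the single sample mean, is what lets stakeholders judge how much weight a decision based on this estimate can bear.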

Analysis and predictions:

Using inferential statistics or machine learning, we derive meaningful information from our data. Depending on the data strategy, this can take the form of reports, dashboards, prediction systems, etc.

Visualization:

This is the process of presenting information and data to stakeholders in a meaningful way, as easily understandable, accurate and actionable insights. If the goal is to build a prediction system, visualization of the data is less important, but stakeholders must still be able to monitor the prediction system to ensure its accuracy.
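Monitoring a prediction system can be as simple as tracking its recent error and raising a flag when it drifts. The sketch below keeps a rolling mean absolute error (MAE) over the last few predictions; the class name, window size and alert threshold are hypothetical choices that would in practice be agreed with stakeholders:

```python
from collections import deque

class PredictionMonitor:
    """Track mean absolute error over the most recent predictions."""

    def __init__(self, window=5, max_mae=10.0):
        # deque(maxlen=...) silently drops the oldest error once full.
        self.errors = deque(maxlen=window)
        self.max_mae = max_mae

    def record(self, predicted, actual):
        """Store the absolute error once the true outcome is known."""
        self.errors.append(abs(predicted - actual))

    def mae(self):
        """Mean absolute error over the current window (0.0 if empty)."""
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_attention(self):
        """True when recent error exceeds the agreed threshold."""
        return self.mae() > self.max_mae

monitor = PredictionMonitor(window=3, max_mae=10.0)
history = [(100, 104), (98, 95), (110, 108), (120, 150), (115, 140)]
for predicted, actual in history:
    monitor.record(predicted, actual)
    print(f"MAE={monitor.mae():.1f} alert={monitor.needs_attention()}")
```

A rolling window makes the alert react to recent behavior instead of being diluted by a long history of accurate predictions.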

Meaningful insights:

This is the end goal of the data science project, as defined by the data strategy. The insights must be in line with the strategic aims of the enterprise.

Combined with related fields such as Artificial Intelligence and Machine Learning, data science is set for even wider adoption among organizations both big and small. Harbinger Systems is hosting a webinar, "Discover the Potential of Your Data with Machine Learning", on May 12th, 2016, 10 am PST. Join us to explore how to apply machine learning to enterprise data, the tools and technologies needed, and some interesting real-world use cases.